#AI survey tool

Explore tagged Tumblr posts

The Economic Impact of Ineffective Decision-Making in Global Companies

Ineffective decision-making poses significant risks to global companies, impacting financial performance, operational efficiency, strategic competitiveness, and reputational integrity. However, by leveraging AI insights from Xp, companies can mitigate these risks and make more informed, data-driven decisions. As companies continue to embrace AI as a strategic tool, the role of AI in enhancing decision-making and driving long-term success will only continue to grow, shaping the future of business in profound ways.

#market research#ai survey#market analysis#consumerbehavior#data driven decisions#data insights#market trends#machine learning#artificial intelligence#ai powered solutions#ai powered learning platform#ai powered tool#strategic planning#strategic decision making#strategicplanning#strategicmarketing#Decision-Making

AI in Tertiary Education: Progress and Prospects

Discover how AI is transforming adult tertiary education in Aotearoa New Zealand. Explore insights from the AARIA research project led by Graeme Smith and Michael Grawe, focusing on personalised learning, ethical AI, and equitable education.

Scoping the Integration of AI in Tertiary Education in Aotearoa New Zealand

Artificial Intelligence (AI) is making significant strides in various sectors, and education is no exception. Michael Grawe and I are undertaking the AARIA research project, “Scoping the Integration of AI in Adult Tertiary Education: An Equitable and Outcome-Focused Approach in Aotearoa New Zealand,” which highlights the…

#AARIA research#adult tertiary education#AI in education#AI integration#AI tools#Ako Aotearoa#algorithmic bias#data privacy#Digital Transformation#educational technology#educator survey#equitable education#Ethical AI#Graeme Smith#Michael Grawe#Māori Education#neurodiverse learners#Pacific education#personalised learning#teaching strategies

Phonesites

Turn more of your visitors into customers

Easily build websites, landing pages, surveys, pop-ups, and digital business cards in just 10 minutes. All right from your phone!

Phonesites is the easiest and fastest way to start collecting leads. Create pages from your phone or desktop in minutes.

Use on any Device

Collect Leads

Sell Products

Optimize & Grow

No-Code Builder

500+ Templates

Email Automation

1000+ Integrations

Create high-converting landing pages faster

Tools to grow your business easily

Enjoy unlimited websites, traffic, and leads

Training and world-class support

Guided 1:1 orientation call

Weekly workshops

Community support

10k+ teams love Phonesites.

Not convinced yet? We love a challenge.

Get Started FREE

No credit card required!

#ai#ai writer#ai tools#ai generated#artificial intelligence#templates#integrations#intelligenza artificiale#inteligencia artificial#chatgpt#bot#website builder#landing page builder#popup#surveys#digital business card#mobile marketing#lead generation#email automation#ai copywriting#copywriter#text generator#text creator

Not CSP announcing they will be implementing stable diffusion in the next update

#ARTISTS DON'T GET ONE DAY OF PEACE IN THIS SHIT#at least this is experimental and they will open a survey afterwards#SO THERE IS A CHANCE THEY WILL BACK OUT#i would rather they actually heard artists and fIXED THE GOD DAMN TRANSFORMATION TOOL#I JUST WANT TO RESIZE SOMETHING WITHOUT IT LOOKING AS BLURRY AS THE WORLD WHEN I TAKE OFF MY GLASSES#MEDIBANG CAN KEEP MY LINES CRISP LIKE POTATO CHIP WHY CANT YOU#if they wanted to implement an ai to assist artists#there were SO MANY more ethical ways for them to do that#i can come up with a few#that i think are quite doable#but nooooo lets listen to the techbros instead of the artists that actually use our products#and are the ones affected by stable diffusion's shitteru

AI “art” and uncanniness

TOMORROW (May 14), I'm on a livecast about AI AND ENSHITTIFICATION with TIM O'REILLY; on WEDNESDAY (May 15), I'm in NORTH HOLLYWOOD for a screening of STEPHANIE KELTON'S FINDING THE MONEY; FRIDAY (May 17), I'm at the INTERNET ARCHIVE in SAN FRANCISCO to keynote the 10th anniversary of the AUTHORS ALLIANCE.

When it comes to AI art (or "art"), it's hard to find a nuanced position that respects creative workers' labor rights, free expression, copyright law's vital exceptions and limitations, and aesthetics.

I am, on balance, opposed to AI art, but there are some important caveats to that position. For starters, I think it's unequivocally wrong – as a matter of law – to say that scraping works and training a model with them infringes copyright. This isn't a moral position (I'll get to that in a second), but rather a technical one.

Break down the steps of training a model and it quickly becomes apparent why it's technically wrong to call this a copyright infringement. First, the act of making transient copies of works – even billions of works – is unequivocally fair use. Unless you think search engines and the Internet Archive shouldn't exist, then you should support scraping at scale:

https://pluralistic.net/2023/09/17/how-to-think-about-scraping/

And unless you think that Facebook should be allowed to use the law to block projects like Ad Observer, which gathers samples of paid political disinformation, then you should support scraping at scale, even when the site being scraped objects (at least sometimes):

https://pluralistic.net/2021/08/06/get-you-coming-and-going/#potemkin-research-program

After making transient copies of lots of works, the next step in AI training is to subject them to mathematical analysis. Again, this isn't a copyright violation.

Making quantitative observations about works is a longstanding, respected and important tool for criticism, analysis, archiving and new acts of creation. Measuring the steady contraction of the vocabulary in successive Agatha Christie novels turns out to offer a fascinating window into her dementia:

https://www.theguardian.com/books/2009/apr/03/agatha-christie-alzheimers-research
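To make "quantitative observations about works" concrete, here is a minimal sketch of one such measurement: counting distinct words in a fixed-size sample and computing a type-token ratio. It is not the method used in the Christie research; the function name, the sample size and the two stand-in passages are all hypothetical.

```python
import re


def vocabulary_stats(text: str, sample_size: int = 50) -> tuple[int, float]:
    """Return (distinct word count, type-token ratio) for the first sample_size words."""
    words = re.findall(r"[a-z']+", text.lower())[:sample_size]
    if not words:
        return 0, 0.0
    distinct = len(set(words))
    return distinct, distinct / len(words)


# Hypothetical stand-in passages, not text from any real novel.
early = "the quick brown fox jumps over the lazy dog near a quiet river bank"
late = "the thing was a thing and the thing did a thing with the other thing"

for label, passage in (("early", early), ("late", late)):
    distinct, ratio = vocabulary_stats(passage)
    print(f"{label}: {distinct} distinct words, type-token ratio {ratio:.2f}")
```

A lower ratio means more repetition in the sample. Real studies compare equal-sized samples across many books, because the ratio falls as a text gets longer.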

Programmatic analysis of scraped online speech is also critical to the burgeoning formal analyses of the language spoken by minorities, producing a vibrant account of the rigorous grammar of dialects that have long been dismissed as "slang":

https://www.researchgate.net/publication/373950278_Lexicogrammatical_Analysis_on_African-American_Vernacular_English_Spoken_by_African-Amecian_You-Tubers

Since 1988, UCL's Survey of English Usage has maintained its "International Corpus of English," and scholars have plumbed its depths to draw important conclusions about the wide variety of Englishes spoken around the world, especially in postcolonial English-speaking countries:

https://www.ucl.ac.uk/english-usage/projects/ice.htm

The final step in training a model is publishing the conclusions of the quantitative analysis of the temporarily copied documents as software code. Code itself is a form of expressive speech – and that expressivity is key to the fight for privacy, because the fact that code is speech limits how governments can censor software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech/

Are models infringing? Well, they certainly can be. In some cases, it's clear that models "memorized" some of the data in their training set, making the fair use, transient copy into an infringing, permanent one. That's generally considered to be the result of a programming error, and it could certainly be prevented (say, by comparing the model to the training data and removing any memorizations that appear).
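One simple stand-in for "comparing the model to the training data" is to check a model's outputs for long word sequences that also appear verbatim in the training set. The sketch below does that with word n-grams. It inspects outputs rather than model weights, and the function names, the threshold `n` and the sample strings are all hypothetical.

```python
def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return every run of n consecutive words in text, lowercased."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def memorized_spans(output: str, training_docs: list[str], n: int = 8) -> set[tuple[str, ...]]:
    """Return the n-word sequences in output that appear verbatim in any training document."""
    training_ngrams: set[tuple[str, ...]] = set()
    for doc in training_docs:
        training_ngrams |= word_ngrams(doc, n)
    return word_ngrams(output, n) & training_ngrams


# Hypothetical data, for illustration only.
training_docs = ["officials said on tuesday that the bridge would reopen to traffic next month"]
copied = "the report noted that the bridge would reopen to traffic next month after repairs"
fresh = "a new crossing is planned for the river"

print(len(memorized_spans(copied, training_docs, n=6)))  # overlapping 6-word runs
print(len(memorized_spans(fresh, training_docs, n=6)))   # no overlap expected
```

A larger `n` flags only long verbatim passages, while a smaller `n` also catches common phrases that are not evidence of memorization.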

Not every seeming act of memorization is a memorization, though. While specific models vary widely, the amount of data from each training item retained by the model is very small. For example, Midjourney retains about one byte of information from each image in its training data. If we're talking about a typical low-resolution web image of, say, 300kB, that would be one three-hundred-thousandth (about 0.00033%) of the original image.

Typically in copyright discussions, when one work contains 0.00033% of another work, we don't even raise the question of fair use. Rather, we dismiss the use as de minimis (short for de minimis non curat lex or "The law does not concern itself with trifles"):

https://en.wikipedia.org/wiki/De_minimis

Busting someone who takes 0.00033% of your work for copyright infringement is like swearing out a trespassing complaint against someone because the edge of their shoe touched one blade of grass on your lawn.
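The arithmetic behind the one-byte figure is easy to check. This short sketch expresses one retained byte of a 300 kB image both as a fraction and as a percentage. It assumes 300 kB means 300,000 bytes, and the variable names are illustrative.

```python
BYTES_RETAINED = 1          # per-image figure quoted in the text
IMAGE_SIZE_BYTES = 300_000  # assumption: 300 kB taken as 300,000 bytes

fraction = BYTES_RETAINED / IMAGE_SIZE_BYTES  # one three-hundred-thousandth
percent = fraction * 100                      # the same share as a percentage

print(f"fraction: {fraction:.7f}")
print(f"percent:  {percent:.5f}%")
```

The fraction and the percentage differ by a factor of 100, so it matters which of the two a quoted figure is meant to be.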

But some works or elements of work appear many times online. For example, the Getty Images watermark appears on millions of similar images of people standing on red carpets and runways, so a model that takes even an infinitesimal sample of each one of those works might still end up being able to produce a whole, recognizable Getty Images watermark.

The same is true for wire-service articles or other widely syndicated texts: there might be dozens or even hundreds of copies of these works in training data, resulting in the memorization of long passages from them.

This might be infringing (we're getting into some gnarly, unprecedented territory here), but again, even if it is, it wouldn't be a big hardship for model makers to post-process their models by comparing them to the training set, deleting any inadvertent memorizations. Even if the resulting model had zero memorizations, this would do nothing to alleviate the (legitimate) concerns of creative workers about the creation and use of these models.

So here's the first nuance in the AI art debate: as a technical matter, training a model isn't a copyright infringement. Creative workers who hope that they can use copyright law to prevent AI from changing the creative labor market are likely to be very disappointed in court:

https://www.hollywoodreporter.com/business/business-news/sarah-silverman-lawsuit-ai-meta-1235669403/

But copyright law isn't a fixed, eternal entity. We write new copyright laws all the time. If current copyright law doesn't prevent the creation of models, what about a future copyright law?

Well, sure, that's a possibility. The first thing to consider is the possible collateral damage of such a law. The legal space for scraping enables a wide range of scholarly, archival, organizational and critical purposes. We'd have to be very careful not to inadvertently ban, say, the scraping of a politician's campaign website, lest we enable liars to run for office and renege on their promises, while they insist that they never made those promises in the first place. We wouldn't want to abolish search engines, or stop creators from scraping their own work off sites that are going away or changing their terms of service.

Now, onto quantitative analysis: counting words and measuring pixels are not activities that you should need permission to perform, with or without a computer, even if the person whose words or pixels you're counting doesn't want you to. You should be able to look as hard as you want at the pixels in Kate Middleton's family photos, or track the rise and fall of the Oxford comma, and you shouldn't need anyone's permission to do so.

Finally, there's publishing the model. There are plenty of published mathematical analyses of large corpuses that are useful and unobjectionable. I love me a good Google n-gram:

https://books.google.com/ngrams/graph?content=fantods%2C+heebie-jeebies&year_start=1800&year_end=2019&corpus=en-2019&smoothing=3

And large language models fill all kinds of important niches, like the Human Rights Data Analysis Group's LLM-based work helping Innocence Project New Orleans extract data from wrongful conviction case files:

https://hrdag.org/tech-notes/large-language-models-IPNO.html

So that's nuance number two: if we decide to make a new copyright law, we'll need to be very sure that we don't accidentally crush these beneficial activities that don't undermine artistic labor markets.

This brings me to the most important point: passing a new copyright law that requires permission to train an AI won't help creative workers get paid or protect our jobs.

Getty Images pays photographers the least it can get away with. Publishers' contracts have transformed by inches into miles-long, ghastly rights grabs that take everything from writers, but still shift legal risks onto them:

https://pluralistic.net/2022/06/19/reasonable-agreement/

Publishers like the New York Times bitterly oppose their writers' unions:

https://actionnetwork.org/letters/new-york-times-stop-union-busting

These large corporations already control the copyrights to gigantic amounts of training data, and they have means, motive and opportunity to license these works for training a model in order to pay us less, and they are engaged in this activity right now:

https://www.nytimes.com/2023/12/22/technology/apple-ai-news-publishers.html

Big games studios are already acting as though there was a copyright in training data, and requiring their voice actors to begin every recording session with words to the effect of, "I hereby grant permission to train an AI with my voice" and if you don't like it, you can hit the bricks:

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

If you're a creative worker hoping to pay your bills, it doesn't matter whether your wages are eroded by a model produced without paying your employer for the right to do so, or whether your employer got to double dip by selling your work to an AI company to train a model, and then used that model to fire you or erode your wages:

https://pluralistic.net/2023/02/09/ai-monkeys-paw/#bullied-schoolkids

Individual creative workers rarely have any bargaining leverage over the corporations that license our copyrights. That's why copyright's 40-year expansion (in duration, scope, statutory damages) has resulted in larger, more profitable entertainment companies, and lower payments – in real terms and as a share of the income generated by their work – for creative workers.

As Rebecca Giblin and I write in our book Chokepoint Capitalism, giving creative workers more rights to bargain with against giant corporations that control access to our audiences is like giving your bullied schoolkid extra lunch money – it's just a roundabout way of transferring that money to the bullies:

https://pluralistic.net/2022/08/21/what-is-chokepoint-capitalism/

There's an historical precedent for this struggle – the fight over music sampling. 40 years ago, it wasn't clear whether sampling required a copyright license, and early hip-hop artists took samples without permission, the way a horn player might drop a couple bars of a well-known song into a solo.

Many artists were rightfully furious over this. The "heritage acts" (the music industry's euphemism for "Black people") who were most sampled had been given very bad deals and had seen very little of the fortunes generated by their creative labor. Many of them were desperately poor, despite having made millions for their labels. When other musicians started making money off that work, they got mad.

In the decades that followed, the system for sampling changed, partly through court cases and partly through the commercial terms set by the Big Three labels: Sony, Warner and Universal, who control 70% of all music recordings. Today, you generally can't sample without signing up to one of the Big Three (they are reluctant to deal with indies), and that means taking their standard deal, which is very bad, and also signs away your right to control your samples.

So a musician who wants to sample has to sign the bad terms offered by a Big Three label, and then hand $500 out of their advance to one of those Big Three labels for the sample license. That $500 typically doesn't go to another artist – it goes to the label, who share it around their executives and investors. This is a system that makes every artist poorer.

But it gets worse. Putting a price on samples changes the kind of music that can be economically viable. If you wanted to clear all the samples on an album like Public Enemy's "It Takes a Nation of Millions To Hold Us Back," or the Beastie Boys' "Paul's Boutique," you'd have to sell every CD for $150, just to break even:

https://memex.craphound.com/2011/07/08/creative-license-how-the-hell-did-sampling-get-so-screwed-up-and-what-the-hell-do-we-do-about-it/

Sampling licenses don't just make every artist financially worse off, they also prevent the creation of music of the sort that millions of people enjoy. But it gets even worse. Some older, sample-heavy music can't be cleared. Most of De La Soul's catalog wasn't available for 15 years, and even though some of their seminal music came back in March 2023, the band's frontman Trugoy the Dove didn't live to see it – he died in February 2023:

https://www.vulture.com/2023/02/de-la-soul-trugoy-the-dove-dead-at-54.html

This is the third nuance: even if we can craft a model-banning copyright system that doesn't catch a lot of dolphins in its tuna net, it could still make artists poorer.

Back when sampling started, it wasn't clear whether it would ever be considered artistically important. Early sampling was crude and experimental. Musicians who trained for years to master an instrument were dismissive of the idea that clicking a mouse was "making music." Today, most of us don't question the idea that sampling can produce meaningful art – even musicians who believe in licensing samples.

Having lived through that era, I'm prepared to believe that maybe I'll look back on AI "art" and say, "damn, I can't believe I never thought that could be real art."

But I wouldn't give odds on it.

I don't like AI art. I find it anodyne, boring. As Henry Farrell writes, it's uncanny, and not in a good way:

https://www.programmablemutter.com/p/large-language-models-are-uncanny

Farrell likens the work produced by AIs to the movement of a Ouija board's planchette, something that "seems to have a life of its own, even though its motion is a collective side-effect of the motions of the people whose fingers lightly rest on top of it." This is "spooky-action-at-a-close-up," transforming "collective inputs … into apparently quite specific outputs that are not the intended creation of any conscious mind."

Look, art is irrational in the sense that it speaks to us at some non-rational, or sub-rational level. Caring about the tribulations of imaginary people or being fascinated by pictures of things that don't exist (or that aren't even recognizable) doesn't make any sense. There's a way in which all art is like an optical illusion for our cognition, an imaginary thing that captures us the way a real thing might.

But art is amazing. Making art and experiencing art makes us feel big, numinous, irreducible emotions. Making art keeps me sane. Experiencing art is a precondition for all the joy in my life. Having spent most of my life as a working artist, I've come to the conclusion that the reason for this is that art transmits an approximation of some big, numinous irreducible emotion from an artist's mind to our own. That's it: that's why art is amazing.

AI doesn't have a mind. It doesn't have an intention. The aesthetic choices made by AI aren't choices, they're averages. As Farrell writes, "LLM art sometimes seems to communicate a message, as art does, but it is unclear where that message comes from, or what it means. If it has any meaning at all, it is a meaning that does not stem from organizing intention" (emphasis mine).

Farrell cites Mark Fisher's The Weird and the Eerie, which defines "weird" in easy to understand terms ("that which does not belong") but really grapples with "eerie."

For Fisher, eeriness is "when there is something present where there should be nothing, or there is nothing present when there should be something." AI art produces the seeming of intention without intending anything. It appears to be an agent, but it has no agency. It's eerie.

Fisher talks about capitalism as eerie. Capital is "conjured out of nothing" but "exerts more influence than any allegedly substantial entity." The "invisible hand" shapes our lives more than any person. The invisible hand is fucking eerie. Capitalism is a system in which insubstantial non-things – corporations – appear to act with intention, often at odds with the intentions of the human beings carrying out those actions.

So will AI art ever be art? I don't know. There's a long tradition of using random or irrational or impersonal inputs as the starting point for human acts of artistic creativity. Think of divination:

https://pluralistic.net/2022/07/31/divination/

Or Brian Eno's Oblique Strategies:

http://stoney.sb.org/eno/oblique.html

I love making my little collages for this blog, though I wouldn't call them important art. Nevertheless, piecing together bits of other people's work can make fantastic, important work of historical note:

https://www.johnheartfield.com/John-Heartfield-Exhibition/john-heartfield-art/famous-anti-fascist-art/heartfield-posters-aiz

Even though painstakingly cutting out tiny elements from others' images can be a meditative and educational experience, I don't think that using tiny scissors or the lasso tool is what defines the "art" in collage. If you can automate some of this process, it could still be art.

Here's what I do know. Creating an individual bargainable copyright over training will not improve the material conditions of artists' lives – all it will do is change the relative shares of the value we create, shifting some of that value from tech companies that hate us and want us to starve to entertainment companies that hate us and want us to starve.

As an artist, I'm foursquare against anything that stands in the way of making art. As an artistic worker, I'm entirely committed to things that help workers get a fair share of the money their work creates, feed their families and pay their rent.

I think today's AI art is bad, and I think tomorrow's AI art will probably be bad, but even if you disagree (with either proposition), I hope you'll agree that we should be focused on making sure art is legal to make and that artists get paid for it.

Just because copyright won't fix the creative labor market, it doesn't follow that nothing will. If we're worried about labor issues, we can look to labor law to improve our conditions. That's what the Hollywood writers did, in their groundbreaking 2023 strike:

https://pluralistic.net/2023/10/01/how-the-writers-guild-sunk-ais-ship/

Now, the writers had an advantage: they are able to engage in "sectoral bargaining," where a union bargains with all the major employers at once. That's illegal in nearly every other kind of labor market. But if we're willing to entertain the possibility of getting a new copyright law passed (that won't make artists better off), why not the possibility of passing a new labor law (that will)? Sure, our bosses won't lobby alongside of us for more labor protection, the way they would for more copyright (think for a moment about what that says about who benefits from copyright versus labor law expansion).

But all workers benefit from expanded labor protection. Rather than going to Congress alongside our bosses from the studios and labels and publishers to demand more copyright, we could go to Congress alongside every kind of worker, from fast-food cashiers to publishing assistants to truck drivers to demand the right to sectoral bargaining. That's a hell of a coalition.

And if we do want to tinker with copyright to change the way training works, let's look at collective licensing, which can't be bargained away, rather than individual rights that can be confiscated at the entrance to our publisher, label or studio's offices. These collective licenses have been a huge success in protecting creative workers:

https://pluralistic.net/2023/02/26/united-we-stand/

Then there's copyright's wildest wild card: The US Copyright Office has repeatedly stated that works made by AIs aren't eligible for copyright, which is the exclusive purview of works of human authorship. This has been affirmed by courts:

https://pluralistic.net/2023/08/20/everything-made-by-an-ai-is-in-the-public-domain/

Neither AI companies nor entertainment companies will pay creative workers if they don't have to. But for any company contemplating selling an AI-generated work, the fact that it is born in the public domain presents a substantial hurdle, because anyone else is free to take that work and sell it or give it away.

Whether or not AI "art" will ever be good art isn't what our bosses are thinking about when they pay for AI licenses: rather, they are calculating that they have so much market power that they can sell whatever slop the AI makes, and pay less for the AI license than they would pay for a human artist's work. As is the case in every industry, AI can't do an artist's job, but an AI salesman can convince an artist's boss to fire the creative worker and replace them with AI:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

They don't care if it's slop – they just care about their bottom line. A studio executive who cancels a widely anticipated film prior to its release to get a tax credit isn't thinking about artistic integrity. They care about one thing: money. The fact that AI works can be freely copied, sold or given away may not mean much to a creative worker who actually makes their own art, but I assure you, it's the only thing that matters to our bosses.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/13/spooky-action-at-a-close-up/#invisible-hand

#pluralistic#ai art#eerie#ai#weird#henry farrell#copyright#copyfight#creative labor markets#what is art#ideomotor response#mark fisher#invisible hand#uncanniness#prompting

In early 2020, deepfake expert Henry Ajder uncovered one of the first Telegram bots built to “undress” photos of women using artificial intelligence. At the time, Ajder recalls, the bot had been used to generate more than 100,000 explicit photos—including those of children—and its development marked a “watershed” moment for the horrors deepfakes could create. Since then, deepfakes have become more prevalent, more damaging, and easier to produce.

Now, a WIRED review of Telegram communities involved with the explicit nonconsensual content has identified at least 50 bots that claim to create explicit photos or videos of people with only a couple of clicks. The bots vary in capabilities, with many suggesting they can “remove clothes” from photos while others claim to create images depicting people in various sexual acts.

The 50 bots list more than 4 million “monthly users” combined, according to WIRED's review of the statistics presented by each bot. Two bots listed more than 400,000 monthly users each, while another 14 listed more than 100,000 members each. The findings illustrate how widespread explicit deepfake creation tools have become and reinforce Telegram’s place as one of the most prominent locations where they can be found. However, the snapshot, which largely encompasses English-language bots, is likely a small portion of the overall deepfake bots on Telegram.

“We’re talking about a significant, orders-of-magnitude increase in the number of people who are clearly actively using and creating this kind of content,” Ajder says of the Telegram bots. “It is really concerning that these tools—which are really ruining lives and creating a very nightmarish scenario primarily for young girls and for women—are still so easy to access and to find on the surface web, on one of the biggest apps in the world.”

Explicit nonconsensual deepfake content, which is often referred to as nonconsensual intimate image abuse (NCII), has exploded since it first emerged at the end of 2017, with generative AI advancements helping fuel recent growth. Across the internet, a slurry of “nudify” and “undress” websites sit alongside more sophisticated tools and Telegram bots, and are being used to target thousands of women and girls around the world—from Italy’s prime minister to school girls in South Korea. In one recent survey, a reported 40 percent of US students were aware of deepfakes linked to their K-12 schools in the last year.

The Telegram bots identified by WIRED are supported by at least 25 associated Telegram channels—where people can subscribe to newsfeed-style updates—that have more than 3 million combined members. The Telegram channels alert people about new features provided by the bots and special offers on “tokens” that can be purchased to operate them, and often act as places where people using the bots can find links to new ones if they are removed by Telegram.

After WIRED contacted Telegram with questions about whether it allows explicit deepfake content creation on its platform, the company deleted the 75 bots and channels WIRED identified. The company did not respond to a series of questions or comment on why it had removed the channels.

Additional nonconsensual deepfake Telegram channels and bots later identified by WIRED show the scale of the problem. Several channel owners posted that their bots had been taken down, with one saying, “We will make another bot tomorrow.” Those accounts were also later deleted.

Hiding in Plain Sight

Telegram bots are, essentially, small apps that run inside of Telegram. They sit alongside the app’s channels, which can broadcast messages to an unlimited number of subscribers; groups where up to 200,000 people can interact; and one-to-one messages. Developers have created bots where people take trivia quizzes, translate messages, create alerts, or start Zoom meetings. They’ve also been co-opted for creating abusive deepfakes.

Due to the harmful nature of the deepfake tools, WIRED did not test the Telegram bots and is not naming specific bots or channels. While the bots had millions of monthly users, according to Telegram’s statistics, it is unclear how many images the bots may have been used to create. Some users, who could be in multiple channels and bots, may have created zero images; others could have created hundreds.

Many of the deepfake bots viewed by WIRED are clear about what they have been created to do. The bots’ names and descriptions refer to nudity and removing women’s clothes. “I can do anything you want about the face or clothes of the photo you give me,” the creators of one bot wrote. “Experience the shock brought by AI,” another says. Telegram can also show “similar channels” in its recommendation tool, helping potential users bounce between channels and bots.

Almost all of the bots require people to buy “tokens” to create images, and it is unclear if they operate in the ways they claim. As the ecosystem around deepfake generation has flourished in recent years, it has become a potentially lucrative source of income for those who create websites, apps, and bots. So many people are trying to use “nudify” websites that Russian cybercriminals, as reported by 404Media, have started creating fake websites to infect people with malware.

While the first Telegram bots, identified several years ago, were relatively rudimentary, the technology needed to create more realistic AI-generated images has improved—and some of the bots are hiding in plain sight.

One bot with more than 300,000 monthly users did not reference any explicit material in its name or landing page. However, once a user clicks to use the bot, it claims it has more than 40 options for images, many of which are highly sexual in nature. That same bot has a user guide, hosted on the web outside of Telegram, describing how to create the highest-quality images. Bot developers can require users to accept terms of service, which may forbid users from uploading images without the consent of the person depicted or images of children, but there appears to be little or no enforcement of these rules.

Another bot, which had more than 38,000 users, claimed people could send six images of the same man or woman—it is one of a small number that claims to create images of men—to “train” an AI model, which could then create new deepfake images of that individual. Once users joined one bot, it would present a menu of 11 “other bots” from the creators, likely to keep systems online and try to avoid removals.

“These types of fake images can harm a person’s health and well-being by causing psychological trauma and feelings of humiliation, fear, embarrassment, and shame,” says Emma Pickering, the head of technology-facilitated abuse and economic empowerment at Refuge, the UK’s largest domestic abuse organization. “While this form of abuse is common, perpetrators are rarely held to account, and we know this type of abuse is becoming increasingly common in intimate partner relationships.”

As explicit deepfakes have become easier to create and more prevalent, lawmakers and tech companies have been slow to stem the tide. Across the US, 23 states have passed laws to address nonconsensual deepfakes, and tech companies have bolstered some policies. However, apps that can create explicit deepfakes have been found in Apple and Google’s app stores, explicit deepfakes of Taylor Swift were widely shared on X in January, and Big Tech sign-in infrastructure has allowed people to easily create accounts on deepfake websites.

Kate Ruane, director of the Center for Democracy and Technology’s free expression project, says most major technology platforms now have policies prohibiting nonconsensual distribution of intimate images, with many of the biggest agreeing to principles to tackle deepfakes. “I would say that it’s actually not clear whether nonconsensual intimate image creation or distribution is prohibited on the platform,” Ruane says of Telegram’s terms of service, which are less detailed than other major tech platforms.

Telegram’s approach to removing harmful content has long been criticized by civil society groups, with the platform historically hosting scammers, extreme right-wing groups, and terrorism-related content. Since Telegram CEO and founder Pavel Durov was arrested and charged in France in August relating to a range of potential offenses, Telegram has started to make some changes to its terms of service and provide data to law enforcement agencies. The company did not respond to WIRED’s questions about whether it specifically prohibits explicit deepfakes.

Execute the Harm

Ajder, the researcher who discovered deepfake Telegram bots four years ago, says the app is almost uniquely positioned for deepfake abuse. “Telegram provides you with the search functionality, so it allows you to identify communities, chats, and bots,” Ajder says. “It provides the bot-hosting functionality, so it's somewhere that provides the tooling in effect. Then it’s also the place where you can share it and actually execute the harm in terms of the end result.”

In late September, several deepfake channels started posting that Telegram had removed their bots. It is unclear what prompted the removals. On September 30, a channel with 295,000 subscribers posted that Telegram had “banned” its bots, but it posted a new bot link for users to use. (The channel was removed after WIRED sent questions to Telegram.)

“One of the things that’s really concerning about apps like Telegram is that it is so difficult to track and monitor, particularly from the perspective of survivors,” says Elena Michael, the cofounder and director of #NotYourPorn, a campaign group working to protect people from image-based sexual abuse.

Michael says Telegram has been “notoriously difficult” to discuss safety issues with, but notes there has been some progress from the company in recent years. However, she says the company should be more proactive in moderating and filtering out content itself.

“Imagine if you were a survivor who’s having to do that themselves, surely the burden shouldn't be on an individual,” Michael says. “Surely the burden should be on the company to put something in place that's proactive rather than reactive.”

56 notes


Text

Valve news and the AI

So. I assume people saw some posts going around on how valve has new AI rules, and things getting axed. And because we live in a society, I went down the rabbit hole to learn my information for myself. Here's what I found, under a cut to keep it easier. To start off, I am not a proponent of AI. I just don't like misinformation. So. Onwards.

VALVE AND THE AI

First off, no, AI will not take things over. Let me show you, supplemented by the official valve news post from here. (because if hbomberguy taught us anything it is to cite your sources)

[Image id: a screenshot from the official valve blog. It says the following:

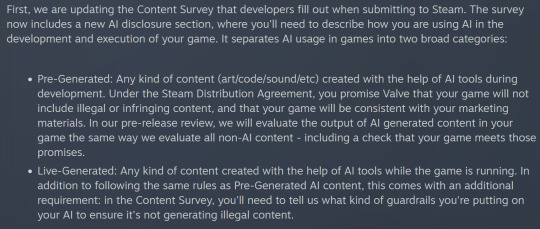

First, we are updating the Content Survey that developers fill out when submitting to Steam. The survey now includes a new AI disclosure section, where you'll need to describe how you are using AI in the development and execution of your game. It separates AI usage in games into two broad categories:

Pre-Generated: Any kind of content (art/code/sound/etc) created with the help of AI tools during development. Under the Steam Distribution Agreement, you promise Valve that your game will not include illegal or infringing content, and that your game will be consistent with your marketing materials. In our pre-release review, we will evaluate the output of AI generated content in your game the same way we evaluate all non-AI content - including a check that your game meets those promises.

Live-Generated: Any kind of content created with the help of AI tools while the game is running. In addition to following the same rules as Pre-Generated AI content, this comes with an additional requirement: in the Content Survey, you'll need to tell us what kind of guardrails you're putting on your AI to ensure it's not generating illegal content. End image ID]

So. Let us break that down a bit, shall we? Valve has been workshopping these new AI rules since last June, and had adopted a wait and see approach beforehand. This had cost them a bit of revenue, which is not ideal if you are a company. Now they have settled on a set of rules. Rules that are relatively easy to understand.

- Rule one: Game devs have to disclose when their game has AI
- Rule two: If your game uses AI, you have to say what kind it uses. Did you generate the assets ahead of time, and they stay like that? Or are they actively generated as the consumer plays?
- Rule three: You need to tell Valve the guardrails you have to make sure your live-generating AI doesn't do things that are going against the law.
- Rule four: If you use pre-generated assets, then your assets cannot violate copyright. Valve will check to make sure that you aren't actually lying.

That doesn't sound too bad now, does it? This is a way Valve can keep going. Because they will need to. And ignoring AI is, as much as we all hate it, not going to work. They need to face it. And they did. So. Onto part two, shall we?

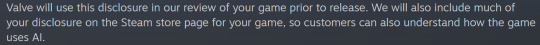

[Image ID: a screenshot from the official Valve blog. It says the following: Valve will use this disclosure in our review of your game prior to release. We will also include much of your disclosure on the Steam store page for your game, so customers can also understand how the game uses AI. End image ID]

Let's break that down.

- Valve will show you if games use AI. Because they want you to know that. Because that is transparency.

Part three.

[Image ID: A screenshot from the official Valve blog. It says the following:

Second, we're releasing a new system on Steam that allows players to report illegal content inside games that contain Live-Generated AI content. Using the in-game overlay, players can easily submit a report when they encounter content that they believe should have been caught by appropriate guardrails on AI generation.

Today's changes are the result of us improving our understanding of the landscape and risks in this space, as well as talking to game developers using AI, and those building AI tools. This will allow us to be much more open to releasing games using AI technology on Steam. The only exception to this will be Adult Only Sexual Content that is created with Live-Generated AI - we are unable to release that type of content right now. End Image ID]

Now onto the chunks.

Valve is releasing a new system that makes it easier to report questionable AI content. Specifically live-generated AI content. You can easily access it by steam overlay, and it will be an easier way to report than it has been so far.

Valve is prohibiting NSFW content with live-generating AI. Meaning there won't be AI generated porn, and AI companions for NSFW content are not allowed.

That doesn't sound bad, does it? They made some rules so they can get revenue so they can keep their service going, while also making it obvious for people when AI is used. Alright? Alright. Now calm down. Get yourself a drink.

---

Team Fortress Source 2

My source here is this.

There was in fact a DMCA takedown notice. But it is not the only thing that led to the takedown. To sum things up: There were issues with the engine, and large parts of the code became unusable. The dev team decided that the notice was merely the final nail in the coffin, and decided to take it down. So that is that. I don't know more on this, so I will not say more, because I don't want to spread misinformation and speculation. I want to keep some credibility, please and thanks.

---

Portal Demake axed

Sources used are from here, here and here.

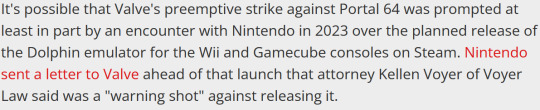

Portal 64 got axed. Why? Because it has to do with Nintendo. The remake uses a Nintendo library. And one that got extensively pirated at that. And we all know how trigger-happy Nintendo is with its intellectual property. And Nintendo is not exactly happy with Valve and Steam, and sent them a letter in 2023.

[Image ID: a screenshot from a PC-Gamer article. It says the following: It's possible that Valve's preemptive strike against Portal 64 was prompted at least in part by an encounter with Nintendo in 2023 over the planned release of the Dolphin emulator for the Wii and Gamecube consoles on Steam. Nintendo sent a letter to Valve ahead of that launch that attorney Kellen Voyer of Voyer Law said was a "warning shot" against releasing it. End Image ID.]

So. Yeah. Nintendo doesn't like people doing things with their IP. Valve is most likely avoiding potential lawsuits, both for themselves and Lambert, the dev behind Portal 64. Nintendo is an enemy one doesn't want to have. Valve is walking the "better safe than sorry" path here.

---

There we go. This is my "let's try and clear up some misinformation" post. I am now going to play a game, because this took the better part of an hour. I cited my sources. Auf Wiedersehen.

159 notes


Text

Story writing: The Assassin Lesson

Greetings everyone. I am trying to get back some story ideas of heart back in my mind with AI support.

I understand that many people feel resistant to AI currently, but I think it could be a chance for some of my buried ideas digging back to light. I think it should be OK for make use of it for drafting and brainstorming. Wish you will accept it and like it.

-------------------------------------------------------

The Assassin Lesson

In a training site of an assassin group, the mentor lady of the group stood before her class of aspiring young assassins. The leather suit covered by hooded cloak outlined her beautiful body curves. Her piercing gaze surveyed the room, which cause the atmosphere become thick and heavy, but brought a hint of anticipation to the class.

As one of the master of assassin in the group, the lesson of the mentor lady was focusing on the fatal spots of the human body. Before she began her lesson, she brought a beautiful female with a slender figure to her students. She was a young thief captured in an incidental encounter during a mission. Her upper body had been stripped naked, with her wrists bound with tight restraints, stood at the front of the class. Her eyes wide with fear.

"Today, we shall delve into the skill of piercing the human heart."

The mentor lady began, her low and commanding tone sending shivers down the spines of her students. With a swift motion, she spread out a drawing of a human heart, its delicate form sketched meticulously on a piece of parchment.

Walking towards the captive, the mentor caressed the girl carefully, and made use of some simple drawing tool against her bare chest. Soon, a line art appeared between her petite but firm breasts, aligning it with the actual size and position of her ribcage and her heart beneath. The students leaned forward, their eyes fixated on the scene unfolding before them.

"Now, observe," the mentor said, her voice unwavering.

"The human heart was protected beneath the ribcage, nestled within the chest cavity. To truly strike a fatal blow, one must understand its position and structure."

She pointed to the various parts of the heart drawing on the captive, her finger tracing the major arteries and ventricles. The young thief’s chest rose and fell rapidly, her breath shallow and uneven. Which felt like the mentor’s finger directly touching her myocardium.

"The atria, the ventricles, the aorta," the mentor continued, her voice filled with an unsettling mix of knowledge and detached fascination. "Each component is vital to the heart's function, and each represents a potential fatal spot."

The young thief visibly trembled, her eyes darting around the room, searching for an escape that was not forthcoming.

"One wrong move, and the heart's delicate rhythm is disrupted," the mentor said, her voice dropping to a chilling whisper. "A swift and precise strike, however, can send the body into an irreversible state of shock."

At this point, the mentor paused, allowing her words to hang in the air, the weight of her lesson sinking in. The students exchanged glances, fully aware of the power they were being entrusted with.

"Now, my dear students," the mentor said, her voice rising with an unsettling intensity, "let me introduce the tools we mainly use for piercing the heart.”

The mentor's eyes gleamed with an aggressive pleasure as she revealed an array of common weapons used on the table with a quick motion. As she began explaining each weapon in meticulous detail, the captured girl's terror was palpable, her eyes widening in fear as she gazed upon the deadly tools before her. Feeling as if these sharp edges had already torn her horrified heart.

"First, we have the thin, needle-like stiletto blade," the mentor said, her voice dripping with a chilling enthusiasm. "Its slender form allows for precise entry, slipping between the ribs without causing unnecessary damage."

As she spoke, the mentor demonstrated the correct posture for piercing, gently pressing the stiletto against the girl's exposed skin, mirroring the intended action. The girl's heart beat erratically, a visible thumping against her left breast. She shivered, her body tensing involuntarily at the sensation, a cold sweat forming on her forehead.

"Next, we have the wickedly serrated dagger," the mentor continued, her voice filled with a sinister delight. "Its jagged edges can tear through flesh and bone, ensuring a quick and devastating stab."

With a swift motion, the mentor mimicked the piercing action on the girl's skin, her hand moving in a delicate manner. The young thief let out a stifled gasp, her heart pounding even harder in her chest, as if resisting the impending violence. Beads of crimson blood welled up where the blade had made contact, as a testament to the sharpness of the weapon and the fragility of human flesh.

The mentor's eyes narrowed, relishing in the power that played out before her. She continued her lesson, each weapon explained and demonstrated with excellent precision.

"Now, behold the slender yet deadly rapier," the mentor said, her voice taking on a haunting resonance. "Its long, piercing blade can navigate the narrowest of spaces, reaching the heart with deadly accuracy."

The mentor positioned the rapier against the girl's skin, her hand poised to demonstrate the thrusting motion. The captive's breathing grew shallow, her body trembling uncontrollably under the weight of her fear. As the mentor made a swift but soft thrust, the young heart skipped a beat, as if mirroring the terror coursing through her veins.

As the mentor moved through the remaining weapons, the captured girl's terror only intensified. The mentor's explanations were accompanied by demonstrations on the girl's soft skin, each movement were calculated and precise. The pain and fear etched on the captive's face mirrored the darkness hidden within the mentor's own soul.

"In the next section," the mentor lady paused a second, staring at the captive. "We are to demonstrate the precise locations where the weapons should enter the body, piercing the heart." The terrified thief stood frozen, her eyes wide with fear, as the mentor approached her with a gaze of dominance.

"Pay close attention, my dear students," the mentor commanded, her voice laced with an eerie calmness. "As we delved before, the human heart was well protected within the chest cavity. To penetrate the heart efficiently, we must aim for specific entry points. Allow me to explain."

The mentor positioned herself behind the captive, placing her hands on the girl's shoulders, as if guiding her through the macabre lesson. The captive's body trembled beneath the mentor's touch, her breath was quick and shallow.

"First," the mentor began, her voice resonating with authority, "We have the area between the 3rd and 4th rib, near the sternum. This position allows for a quick and efficient stab, aiming directly at the center of the heart's chambers."

With precise movements, the mentor's hand mimicked the action of a weapon, her fingers hovering just above the inner side of the captive's left breast, indicating the location. The captive flinched, a shiver coursing through her body, as if she could feel the cold steel of an imaginary blade piercing her flesh.

"Next," the mentor continued, her voice low and steady, "we have the space between the 4th and 5th rib, commonly known as the apex of the heart. Representing the tip of the left and right ventricles. Striking here can disrupt the heart's rhythm and lead to swift incapacitation," the mentor paused a bit, "And this is actually my favorite piercing spot."

The mentor's hand shifted slightly lower, held tightly under the left breast of the young thief. Her heart raced in response, the rumbling apex hammering against the palm of the mentor. She bit her trembling lip, her eyes darting nervously between the assassin students and the weapons displayed on the table.

"Moving on," the mentor said, her tone filled with a chilling precision, "we have the area below the xiphoid, right below the heart. Here is the blind spot of the ribcage coverage. A well-placed strike here can cause severe damage from the bottom of right ventricle."

The mentor's hand descended further, hovering just above the captive's abdomen, her fingers poised as if preparing to strike. The captive's breath hitched, her body tensing as if bracing for impact. The room seemed to grow colder as she saw the focused eyes of the assassin students.

"And finally," the mentor concluded, her voice dropping to a chilling whisper, "We have the area over the clavicle. This position allows us to bypass most of the chest armor and ribcage, to penetrate the atria and aorta directly, provided the weapon is long enough."

The mentor's hand moved to the captive's collarbone area, caressed the pulsating veins underneath. The captive's eyes widened, a mix of terror and realization reflecting in their depths. The mentor's teachings had painted a dark path ahead, one that demanded a cold and calculated approach for her fellows students.

"And NEXT..." the mentor scanned the room, her eyes flickering with amusement.

"Is the time for PRACTICE."

Hearing this, the captured girl’s heart sank to the bottom of abyss. She knew that her doom was imminent. Her heart raced uncontrollably, pounding against her chest as if desperately trying to escape its impending fate.

The mentor asked her students if any of them would like to recommend themselves for the upcoming practice session. Excitement filled the air as most of the girls eagerly raised their hands, their faces lit up with anticipation.

With a sinister smile, the mentor selected a student from the eager faces. The chosen student stepped forward, took down her hood, her eyes shined with expectations and determination. The mentor allowed the student to have her pick of weapon and piercing spot, relishing in the power dynamics that played out before her.

The student's gaze lingered over the arsenal of deadly tools, selecting a weapon with a menacing aura. She ran her fingers along the blade, savoring the anticipation that filled the room. With a wicked grin, she turned to face the captive girl, her voice dripping with delight.

"I choose the serrated dagger," the student declared, her voice tinged with a chilling excitement. "And I want to strike at the apex of her heart, just like the mentor I admire."

The captive girl's eyes widened in terror, her breath catching in her throat. The mentor's own smile widened, seeing the fear etched across the captive's face. She nodded approvingly, allowing the student to proceed with her choice.

The student approached the captive girl, her movements deliberate and calculated. The air grew heavy with tension as the serrated dagger glinted ominously in her hand. The captive girl's heart was beating in an insane rhythm, facing the incoming intent to kill with full of fear and despair.

As the student positioned herself, the mentor watched intently. Her eyes glimmering with a twisted joyous. The student's hand trembled with anticipation, staring at the throbbing point below the left breast of the shivering young thief. Her blade poised to strike. The captive girl's body tensed, her eyes locked on the weapon that would soon pierce her vulnerable flesh.

"Don’t blame me." whispered by the young assassin.

In one swift and merciless motion, the student thrust the serrated dagger right between the 4th and 5th rib, torn the captive girl's heart from the apex. The room seemed to freeze in that moment, the sound of the blade piercing flesh echoing through the air.

The captive girl let out a choked gasp, her eyes widened with agony. Her body kneeled down, convulsing with the searing pain that seeped through her being.

"Come, my dear," the mentor held up the young thief, and let the outstanding student to listen to her last heaving chest. "Remember this faltering heart sound, representing our power, and the fragile of life." Her desperate heartbeat, staggered with the spurting sound of blood, echoed in the mind of the student.

Her heart, the very core of her existence, reacted with a final surge of desperation. It beat wildly, as if fighting against the intrusion, a futile attempt to cling to life. But the cruel reality of the situation prevailed, and with each weakening beat, the girl's life force slipped away.

The mentor watched with a twisted satisfaction as the young thief's body slumped, lifeless and still. The room fell into an eerie silence. The mentor's eyes gleamed with a sense of accomplishment, reveling in the darkness that had unraveled within her students.

"Observe, my dear fellow students," wiped the stains on her student’s cheek, she declare to everyone with determination. "This is what we have, the power deciding life and death. But remember, the fleeting nature of life binds us all. We have to be skilled to avoid becoming the next fallen heart."

The End

135 notes


Text

As firms increasingly rely on artificial intelligence-driven hiring platforms, many highly qualified candidates are finding themselves on the cutting room floor.

Body-language analysis. Vocal assessments. Gamified tests. CV scanners. These are some of the tools companies use to screen candidates with artificial intelligence recruiting software. Job applicants face these machine prompts – and AI decides whether they are a good match or fall short.

Businesses are increasingly relying on them. A late-2023 IBM survey of more than 8,500 global IT professionals showed 42% of companies were using AI screening "to improve recruiting and human resources". Another 40% of respondents were considering integrating the technology.

Many leaders across the corporate world hoped AI recruiting tech would end biases in the hiring process. Yet in some cases, the opposite is happening. Some experts say these tools are inaccurately screening some of the most qualified job applicants – and concerns are growing the software may be excising the best candidates.

"We haven't seen a whole lot of evidence that there's no bias here… or that the tool picks out the most qualified candidates," says Hilke Schellmann, US-based author of The Algorithm: How AI Can Hijack Your Career and Steal Your Future, and an assistant professor of journalism at New York University. She believes the biggest risk such software poses to jobs is not machines taking workers' positions, as is often feared – but rather preventing them from getting a role at all.

98 notes


Text

Anti-AI Research Project - Planning Survey

Hi everyone! Back in May, I shared this post about a friend's university project aiming to create an anti-AI tool for use in audio work/voice acting.

Her project has been approved! Planning is now underway, so she's created a first-pass anonymous survey for interested participants to fill out, particularly those that deal directly with audio in any form.

These responses won't be used as part of the final data generated from the research, but they are an initial check into the community that will be useful throughout the process. It should take about 5 minutes, and you can find the survey here, so please fill it out if you get a chance!

To stay updated with her work, you can join the research Discord server, or we've set up a mailing list for those that would just prefer to have updates emailed directly to them.

51 notes


Text

Very good article on AI and the unsustainable pace of the self-publishing industry:

Lepp, who writes under the pen name Leanne Leeds in the “paranormal cozy mystery” subgenre, allots herself precisely 49 days to write and self-edit a book. This pace, she said, is just on the cusp of being unsustainably slow. She once surveyed her mailing list to ask how long readers would wait between books before abandoning her for another writer. The average was four months. Writer’s block is a luxury she can’t afford, which is why as soon as she heard about an artificial intelligence tool designed to break through it, she started beseeching its developers on Twitter for access to the beta test. […]

With the help of the program, she recently ramped up production yet again. She is now writing two series simultaneously, toggling between the witch detective and a new mystery-solving heroine, a 50-year-old divorced owner of an animal rescue who comes into possession of a magical platter that allows her to communicate with cats. It was an expansion she felt she had to make just to stay in place. With an increasing share of her profits going back to Amazon in the form of advertising, she needed to stand out amid increasing competition. Instead of six books a year, her revised spreadsheet forecasts 10.

65 notes


Text

AI’s Role in Redefining Corporate Strategy: Insights from Xp

Corporate strategy has never been more critical. Companies must navigate an array of challenges, from shifting consumer preferences and global competition to technological disruptions and regulatory changes. In this environment, the role of artificial intelligence (AI) in redefining corporate strategy has become increasingly pronounced. Leveraging AI-driven insights, companies can gain a competitive edge, anticipate market trends, and make informed decisions that drive long-term success. In this blog, we’ll explore the transformative impact of AI on corporate strategy and draw insights from Xp.

#market analysis#online survey#market research#market research surveys#consumerbehavior#ai survey#corporatestrategy#datainsights#market trends#market growth#deeper insights#actionable insights#ai driven decision making processes#consumer preferences#AI-powered risk management tools#AI-driven market segmentation#market dynamics#california news

0 notes

Text

studying and working in the business/management side of things has really put into perspective how big ai is getting and how normalized it's becoming. like it is being targeted at becoming a normal everyday tool for usage. a number of business professionals i work with and my professors too, have both, more often than not, just started telling people to "use chat gpt." like if you're trying to formulate a survey, they tell you to ask chat gpt a good set of questions that could be used. if you're trying to figure out the best way to categorize data, they tell you to ask chat gpt what they suggest. they've even started to suggest asking chat gpt how to word emails, and it's just ai this and ai that, and it's slightly concerning because as prevalent as technology is, eventually, you won't need people to write emails or break down data or create and send surveys at all. and it's like. i am feeding into a system that might one day make me jobless, but for the sake of efficiency and quality, i will just agree with this and go along. and so many students nod their heads and say okay !! for the sake of getting a good grade and slacking off, but really we are not getting taught how to conduct surveys and properly word tricky emails and break down data for categorizing, and it's just. very very wild, and i feel like i'm the only person in the classroom who holds this view bc apparently, only on tumblr do ppl see the dangers of ai, everyone else seems to think it's so cool 😭

134 notes


Note

Little conspiracy theory- what if WEBTOON is aware this is AI? I remember there was this thing where you could take a picture of yourself and with ai they would try imaging you in a popular webcomics style. As a person with darker skin, mine looked pretty iffy. What if WEBTOON is testing how far they can go with ai?

Not even really a conspiracy theory tbh, they have the selfie AI thing and the AI coloring tool. I think there's just a very real possibility that WT is moving towards AI-assisted and AI-made comics and, like many of their controversies over the years, they're just expecting us to accept it blindly. Of course, they haven't outright confirmed that yet, but odds are high considering the past instances of AI they've promoted, and I don't think they want to say anything outright about it because that'll open up a can of worms they aren't prepared to deal with.

Something something "it's a feature, not a bug".

Also yeah, definitely not surprised to hear that the AI selfie tool didn't do a good job at rendering your skin tone (although I am sorry you experienced that, it's so unfair to anyone who isn't one specific shade of white). I have dark-skinned characters who I tried to color in on their painter tool and it just assumed the darker tones were meant to be shadows, it literally just made them look like their faces had been spraypainted 😭 I made it a point in the survey I filled out about it that it needs to be able to handle non-white skin tones better than it is (because duh), but I don't think they've bothered refining it since then. It's kinda telling they've been training these AI tools purely on their Korean library which predominantly features light-skinned casts.

Webtoons/Naver seems to operate with a very specific audience in mind and they're not as inclusive as they try to pretend they are. I'm very done with their "monster of the week" business model where they're just constantly outdoing themselves on how shitty and cruel to artists they can be.

63 notes
